Dec 24, 2013 Backpropagation Algorithm Implementation. I have trouble implementing backpropagation in a neural net. A long time ago, I wanted to implement a neural network in a programming language, but I couldn't. From your article and source code, I want to study how to implement it. Backpropagation is a supervised learning algorithm and is mainly used by multi-layer perceptrons.

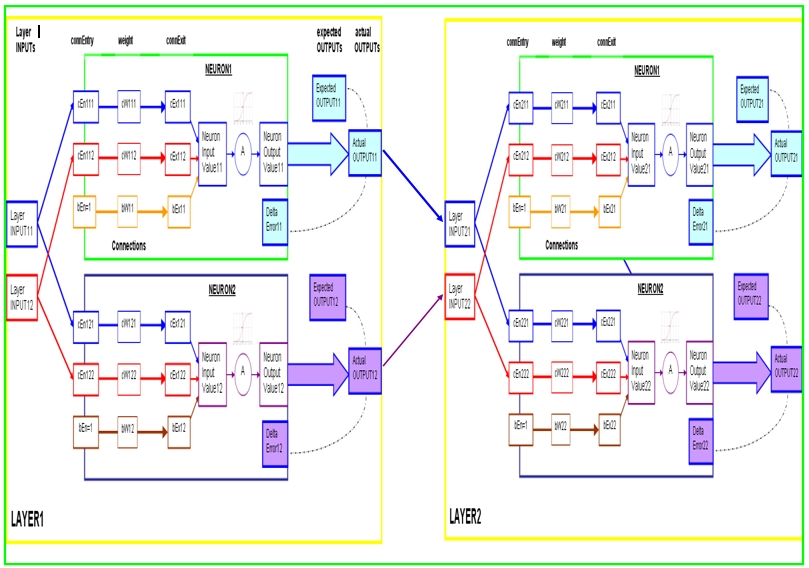

I've recently completed Professor Ng's Machine Learning course on Coursera, and while I loved the entire course, I never really managed to understand the backpropagation algorithm for training neural networks. My problem with understanding it is that he only ever teaches the vectorised implementation of it for fully-connected feed-forward networks. My linear algebra is rusty, and I think it would be much easier to understand if somebody could teach me the general-purpose algorithm, perhaps in a node-oriented fashion.

I'll try to phrase the problem simply, but I may be misunderstanding how backprop works, so if this doesn't make sense, disregard it: for any given node N, given the input weights/values, the output weights/values, and the error/cost of all the nodes that N outputs to, how do I calculate the 'cost' of N and use this to update the input weights?

Let's consider a node in a back-propagation (BP) network.

It has multiple inputs, and produces an output value. We want to use error-correction for training, so it will also update weights based on an error estimate for the node. Each node has a bias value, θ. You can think of this as a weight to an internal, constant 1.0 valued input. The activation is a summation of weighted inputs and the bias value. Let's refer to our node of interest as j, index the nodes in the preceding layer by i, and index the nodes in the succeeding layer by k.
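To make the description above concrete, here is a minimal sketch of a single node's activation in plain Python, with no linear algebra. The names `net_input`, `logistic`, `o_i`, `w_ij` and `theta_j`, and all the numbers, are made up for illustration. The logistic squashing function is an assumption too, since the text so far only says the activation is a weighted sum plus the bias.

```python
import math


def net_input(inputs, weights, bias):
    """Weighted sum of the incoming values plus the bias (theta)."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    return sum(w * x for w, x in zip(weights, inputs)) + bias


def logistic(a):
    """Assumed output function; the text does not name one."""
    return 1.0 / (1.0 + math.exp(-a))


# Hypothetical values for node j with three incoming connections.
o_i = [0.5, -1.0, 2.0]    # outputs of the preceding-layer nodes i
w_ij = [0.4, 0.3, -0.2]   # weights on the connections i -> j
theta_j = 0.1             # bias of node j

a_j = net_input(o_i, w_ij, theta_j)   # activation of node j
o_j = logistic(a_j)                   # value passed on to the nodes k

# The bias behaves like one more weight attached to a constant 1.0 input.
a_j_alt = net_input(o_i + [1.0], w_ij + [theta_j], 0.0)
```

Treating the bias as an extra weight, as in `a_j_alt`, means the same update rule can later be applied to it as to every other weight.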